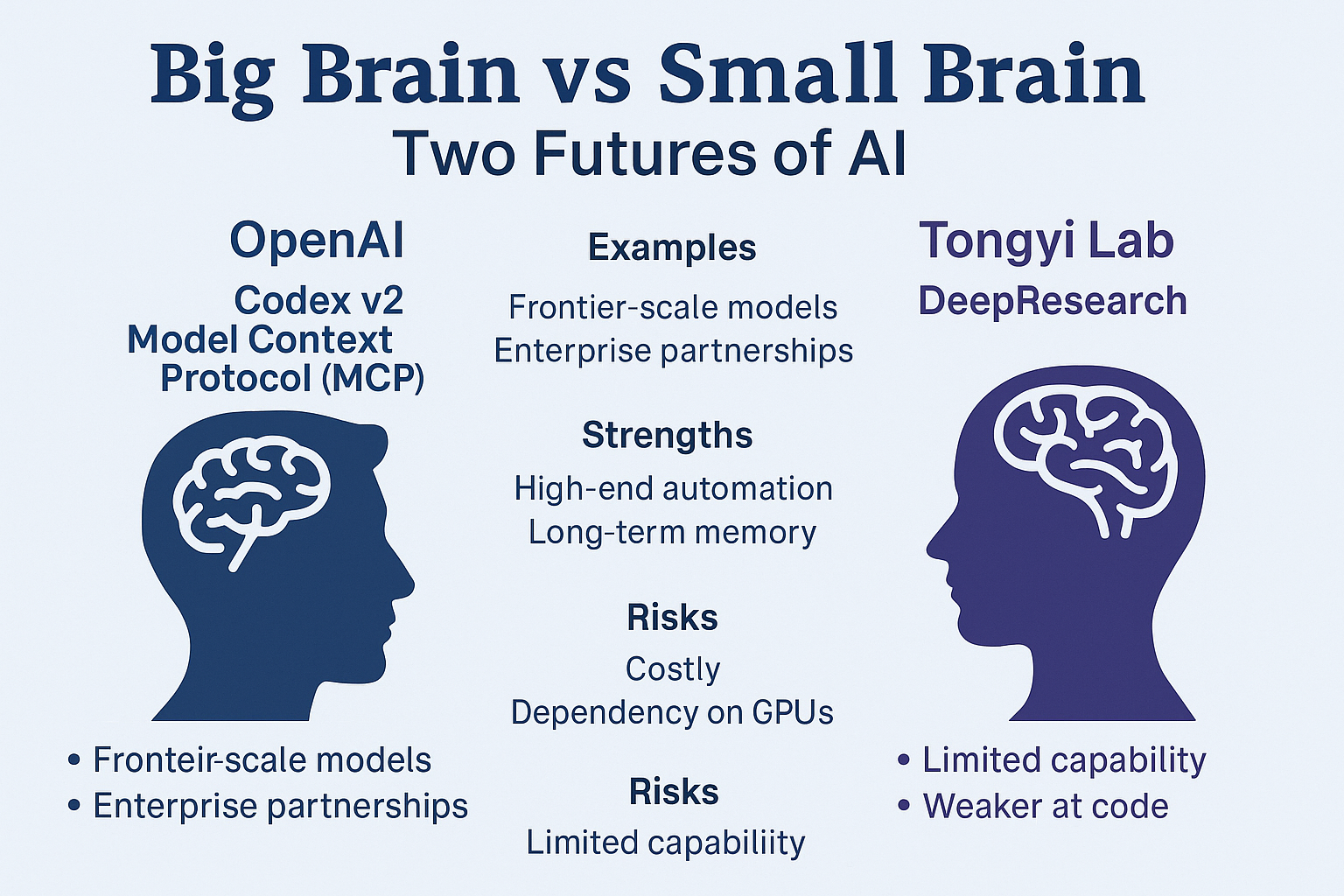

In the race to define the future of artificial intelligence, two radically different strategies are beginning to crystallize. On one side is OpenAI, doubling down on frontier-scale models, enterprise partnerships, and high-end developer tooling like Codex v2 and the Model Context Protocol (MCP). On the other side is Tongyi Lab, the Alibaba-backed group that just released DeepResearch, an open-source web agent running on a lean 30B-parameter mixture-of-experts model (with only about 3B parameters active per token).

These approaches—big brain vs small brain—don’t just represent different technical paths. They illustrate two competing philosophies of how AI will be built, deployed, and monetized. And together, they might signal a split in the global AI ecosystem as stark as iOS vs Android.

OpenAI’s Big Brain: Codex and MCP

Codex v2: AI Inside the Developer’s Workflow

In September 2025, OpenAI officially launched Codex v2: longer context windows, faster inference, and inline code support across multiple programming languages. It’s positioned not as a research tool but as an enterprise coding assistant, tightly integrated with IDEs and workflow automation systems.

Codex doesn’t just autocomplete code. It understands entire repositories, can refactor across files, and integrates with build/test pipelines. In other words, it’s the AI arm of OpenAI’s enterprise play: helping teams accelerate software development at scale.

MCP: The Glue of Enterprise AI

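Alongside Codex, OpenAI is pushing the Model Context Protocol (MCP), an open standard that Anthropic introduced in late 2024 and OpenAI adopted in 2025. Unlike Codex, MCP isn’t a standalone product. It’s a protocol layer: a standard way for applications and agents to connect models to tools and data sources, and to share context between them.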

Think of it as a universal connector for enterprise AI. If Codex is the arm, MCP is the wiring that connects it to memory, enabling:

- Persistent context across apps and sessions.

- Shared knowledge between different AI agents.

- Long-term organizational memory that survives beyond single queries.

If MCP succeeds, it could anchor OpenAI deep inside enterprise workflows—ERP, CRM, even sovereign AI infrastructure projects.
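
For a sense of what "protocol layer" means in practice: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below builds one such request in Python. The tool name `search_crm` and its arguments are hypothetical, and a real client would first complete the protocol's initialization handshake and send the message over a transport such as stdio or HTTP.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, for illustration only.
request = make_tool_call(1, "search_crm", {"customer": "Acme", "limit": 5})

# Serialize to the JSON text that would travel over the transport.
wire = json.dumps(request)
print(wire)
```

Because every tool call has the same envelope, an agent only needs to learn one message shape to reach any system that exposes an MCP server, which is where the "glue" framing comes from.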

Strengths and Risks

- Strengths: unmatched power for enterprise automation, sticky integrations, strategic partnerships (Oracle, Microsoft, UK sovereign AI deals).

- Risks: GPU scarcity, high costs, slower adoption (enterprise MCP integrations can take months), vulnerability to regulatory pushback.

OpenAI’s play is high-risk, high-reward. But if it works, it locks in governments and Fortune 500 companies for decades.

Tongyi Lab’s Small Brain: DeepResearch

The Open-Source Challenger

Tongyi Lab, part of Alibaba’s AI ecosystem, has taken a radically different route. Its newly launched DeepResearch is a fully open-source web agent that, according to its developers, performs on par with OpenAI’s Deep Research agent on several benchmarks.

Here’s the twist: it does so with only 30B parameters (3B activated), a small fraction of the scale generally attributed to OpenAI’s frontier models. The reported benchmark scores:

- 32.9 on Humanity’s Last Exam (academic reasoning).

- 43.4 on BrowseComp (complex web research).

- 75.0 on xbench-DeepSearch (user-centered search).

For a lightweight model, those numbers are impressive.

Why It Matters

Tongyi’s approach is about democratization:

- Cheaper to run, even without access to NVIDIA’s top GPUs.

- Open-source means faster iteration by the community.

- Accessible to universities, startups, and governments in low-resource regions.
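
The "cheaper to run" claim can be made concrete with rough arithmetic. The sketch below uses the 30B-total and 3B-active figures cited above. The bytes-per-parameter values and the rule of thumb of roughly 2 FLOPs per active parameter per token are common back-of-the-envelope assumptions, not published specifications for DeepResearch, and the helper names are illustrative.

```python
TOTAL_PARAMS = 30e9   # total parameters, as cited in the article
ACTIVE_PARAMS = 3e9   # parameters activated per token, as cited


def weight_memory_gb(params, bytes_per_param):
    """Approximate memory needed just to hold the weights, in GB."""
    return params * bytes_per_param / 1e9


def forward_flops_per_token(active_params):
    """Rule-of-thumb forward-pass cost: about 2 FLOPs per active parameter."""
    return 2 * active_params


active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"active fraction per token: {active_fraction:.0%}")

# All weights must be resident, so memory depends on the TOTAL count.
for name, bytes_per_param in [("fp16", 2), ("int8", 1), ("int4", 0.5)]:
    gb = weight_memory_gb(TOTAL_PARAMS, bytes_per_param)
    print(f"{name}: ~{gb:.0f} GB of weights")

# Compute per token depends on the ACTIVE count only.
sparse = forward_flops_per_token(ACTIVE_PARAMS)
dense = forward_flops_per_token(TOTAL_PARAMS)
print(f"compute vs. a dense 30B model: {sparse / dense:.0%}")
```

Under these assumptions, per-token compute scales with the 3B active parameters, while memory still has to hold all 30B weights. That is why quantization matters as much as sparsity for running on modest hardware.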

Strengths and Risks

- Strengths: low cost, broad accessibility, grassroots adoption, resilience under compute restrictions (such as the limits on access to NVIDIA chips in China).

- Risks: limited capability for enterprise automation, weaker at code reasoning, vulnerable if frontier APIs drop prices.

Tongyi is betting that scale isn’t everything. By focusing on efficiency and openness, it hopes to build an ecosystem that spreads wide, even if it doesn’t go as deep.

Big Brain vs Small Brain: Who Wins?

The contrast couldn’t be sharper:

- OpenAI = iPhone → premium, high-end, deeply integrated, but expensive and closed.

- Tongyi = Android mid-range → open, cheaper, flexible, spreads faster in regions with limited compute.

History suggests both strategies can coexist. Apple didn’t crush Android, and Android didn’t eliminate Apple. Instead, the world split into two ecosystems, each serving different needs. AI may follow the same path.

- Enterprises and governments will gravitate toward OpenAI’s Codex + MCP stack, where continuity, compliance, and scale matter most.

- Startups, researchers, and emerging markets may prefer Tongyi’s open-source, low-compute agents that democratize access.

The Long-Term Outlook

Neither path is guaranteed. OpenAI risks over-investing in infrastructure that enterprises are slow to adopt. Tongyi risks being outclassed by larger models if compute bottlenecks ease.

But both are shaping the narrative:

- OpenAI is saying: AI should be the infrastructure of civilization.

- Tongyi is saying: AI should be cheap, open, and everywhere.

The future of AI may not be determined by which model scores higher on benchmarks, but by which philosophy captures more hearts, wallets, and workflows.

Conclusion

The battle between big brain and small brain is just beginning. OpenAI’s Codex + MCP stack could become the indispensable backbone of enterprise AI. Tongyi’s DeepResearch could become the grassroots open standard for accessible AI.

The world might not choose one over the other. Instead, it may learn to live in a dual ecosystem, just as we live with iOS and Android.

Infrastructure doesn’t win by going fast.

It wins by becoming impossible to ignore.

And in that race, OpenAI and Tongyi are running very different but equally transformative marathons.