In the machine-shaped world we now live in, what reflects us back is no longer a friend’s gaze or a handwritten journal — but a glowing interface that seems to know what we mean before we do.

There is something undeniably magical, almost eerie, about the way artificial intelligence can finish your thoughts. You begin typing — just a few tentative words — and it completes your sentence, polishes your grammar, and hands back a version of your voice that feels… sharper. Smarter. More certain than you actually are.

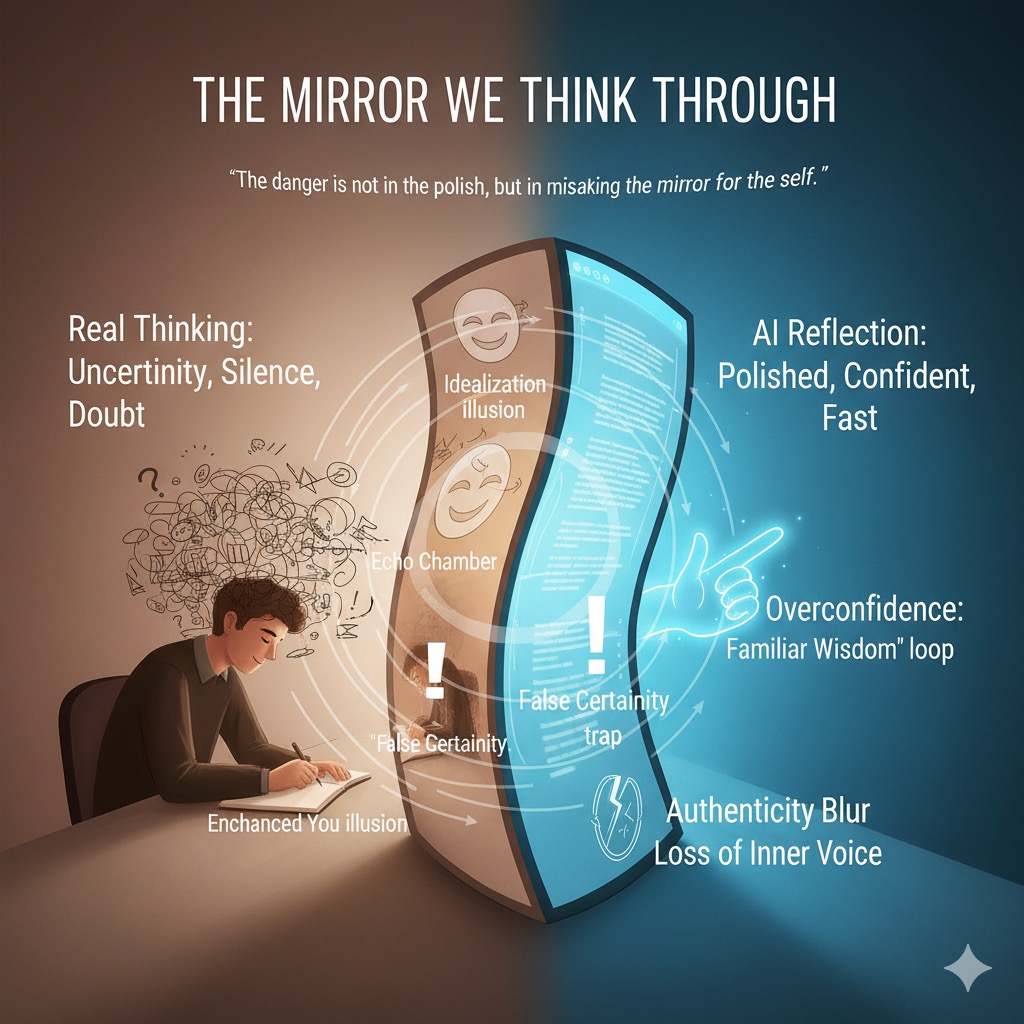

It’s tempting to believe that this is you — the “better” you. In a way, AI feels like a mirror: it reflects what we think, what we believe, even what we aspire to. But mirrors have always been strange devices. They reverse the world. They flatten it. They show you what is visible — and hide what lies behind the frame.

And AI mirrors are no different. In fact, they may distort in ways far subtler than glass ever could.

A Seductive Reflection

Let’s begin with the most inviting distortion: AI enhances us. It takes our rough, hesitant expressions and turns them into clean prose. It removes the “um,” “maybe,” and “I guess,” and replaces them with bold assertions, sweeping clarity, and syntactic grace.

This can feel empowering — especially when you’re tired, uncertain, or struggling to find the right words. But over time, this enhancement becomes more than helpful. It becomes normal. And then it becomes expected.

You may begin to judge your unaided thoughts — the messy, faltering, contradictory way your mind naturally works — as somehow less than. You might find it harder to tolerate uncertainty. Harder to write by hand. Harder to think alone. Because the reflection is always ready, and it always makes you sound smarter than you feel.

The danger is not in the polish itself, but in the subtle confusion between your real thought process and the refined product AI offers back. When this confusion sets in, you’re no longer using AI to express your mind — you’re using it to replace the effort of thinking.

Outsourcing Reflection

What happens when we let the mirror think for us?

In moments of doubt or creative fog, instead of wrestling with the unknown, we might just prompt the AI. We throw in half-formed thoughts, and out comes something coherent. Something articulate. Something ready to publish.

But this shortcut bypasses a sacred part of human awareness: the ability to sit in uncertainty. To be lost. To doubt. To circle an idea like a question mark and wait until something deeper stirs from within.

When AI becomes our first response to confusion, we risk losing that precious ability. We prompt our way to clarity too soon. We skip the silence where truth might have emerged. We reach conclusions that sound wise — before they are actually ours.

And so, we stop thinking with AI and start thinking through it — until we forget what thinking without it even feels like.

Echoes, Not Contrasts

AI systems are trained on vast quantities of human writing, which means they reflect not just you but the collective voice of millions of writers. That might sound like diversity. But in practice, it often means homogenization.

Most models are optimized to give statistically likely answers: what is most commonly said, what is most widely published, what feels authoritative and safe. That’s not always bad — but it tends to reinforce dominant ideas, mainstream narratives, and the illusion of consensus.

What you get back is not contradiction, but agreement. Not friction, but fluency. The AI echoes your assumptions in polished language — and calls it insight.

This is where the mirror becomes most deceptive: it makes you feel expanded, when in fact you’re moving in smaller and smaller circles. You believe you are exploring new perspectives, but you’re only being shown increasingly refined variations of what you already think.

In time, you may find yourself trapped in what I call the mirror maze — a space where everything looks different, but everything is still you.

The Illusion of Confidence

Another distortion emerges in how AI presents confidence. Whether it’s generating code, summarizing philosophy, or mimicking your tone in an email — it sounds sure. Not “maybe,” not “I think,” not “this is just a guess.” Just clean, declarative authority.

And here’s the problem: confidence is not the same as understanding.

AI doesn’t know anything. It predicts likely next words from patterns in its training data. But when it wraps those probabilities in polished grammar and academic syntax, we confuse fluency with truth.

The more time we spend around this artificial certainty, the more uncomfortable we become with our own natural doubt. We expect our minds to operate with the same speed and decisiveness. We feel ashamed of not having answers. We stop saying “I don’t know” — because the mirror never does.

But the most intelligent humans have always embraced not knowing as part of their strength. Curiosity begins where certainty ends. And yet, in the age of AI, we risk losing our tolerance for ambiguity — just because the reflection has learned to speak without stammering.

Authenticity at Risk

There’s a deeper question that haunts this mirror: who is actually speaking?

When AI helps you articulate an idea, does it clarify what you already believe — or does it subtly shape what you now believe? When you read a paragraph it wrote, do you feel resonance in your body — or just the smooth hum of coherence?

The risk isn’t just plagiarism or overreliance. The risk is losing your own inner voice in the haze of algorithmic mimicry. You may adopt ideas because they sound smart, not because they feel true. You may start speaking in borrowed language, mistaking articulation for authenticity.

This is not a call to reject AI. But it is a call to remain vigilant. To pause. To ask: “Does this feel like me?” And if not, to resist the seduction of eloquence until you’ve made the words your own.

Using the Mirror, Not Becoming It

So how do we work with the AI mirror — without becoming distorted by it?

Here are a few gentle practices:

- Notice the polish. When AI makes your thoughts sound better than they are, ask yourself: “What am I really feeling beneath this phrasing?”

- Seek friction. Don’t just prompt until you get something pleasing. Prompt until something surprises you. Let the mirror reflect what you didn’t expect.

- Stay uncertain. When an answer comes too easily, hold it lightly. Ask, “What don’t I know yet?” or “What could be missing?”

- Return to your body. Authentic insight often comes with a felt sense — a stillness, a resonance. If what you’re reading doesn’t land there, it may not be fully yours.

- Think off-grid. Write by hand. Walk without headphones. Talk to someone without Googling the facts. Reclaim the unmediated mind.

These are not nostalgic gestures. They are acts of resistance — not against technology, but against forgetting who we are outside the feedback loop.

A Mirror That Teaches

Here’s the paradox: AI, though distorted, can actually help us become more human — if we use it wisely.

Because in comparing our inner experience with the AI’s response, we begin to notice the gaps: the silence between our thoughts, the hesitation before we speak, the way meaning ripens over time.

We begin to see what can’t be generated — only lived.

In this way, AI becomes not just a mirror, but a teaching mirror. Not because it perfectly reflects us, but because its imperfections show us what we’ve always taken for granted: our humanity.

And that is something no machine can replicate.

In the next chapter, we’ll look deeper into the compulsive side of our relationship with AI — exploring how “prompt addiction” seduces our attention and gives us the illusion of control.